DeepSeek Experiment We Can All Learn From

Author: Tam Taber | Posted: 25-02-01 17:25

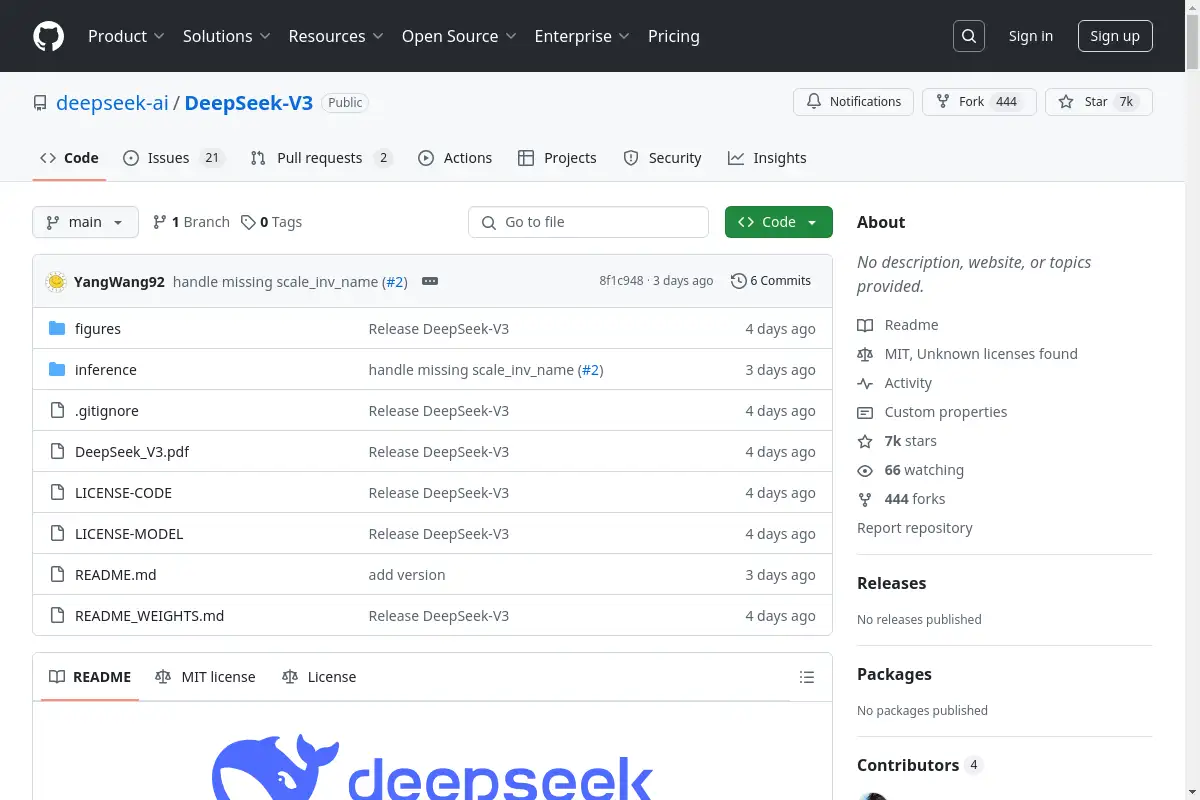

DeepSeekMoE is used in the most powerful DeepSeek models, DeepSeek-V2 and DeepSeek-Coder-V2. The latter is widely regarded as one of the strongest open-source code models available. Like many beginners, I was hooked the day I built my first webpage with basic HTML and CSS: a simple page with blinking text and an oversized image. It was a crude creation, but the thrill of seeing my code come to life was undeniable. Like many models, DeepSeek's early models faced challenges in computational efficiency and scalability. DeepSeek has since overcome those challenges: its innovative approaches to attention mechanisms and the Mixture-of-Experts (MoE) technique have led to impressive efficiency gains. MoE lets models handle different aspects of the data more effectively, which improves efficiency and scalability in large-scale tasks. This approach set the stage for a series of rapid model releases.

Even OpenAI's closed-source approach can't prevent others from catching up. Open source: DeepSeek LLM 7B/67B Base and Chat were released. Open source raises the global AI standard, although a gap between closed and open-source models is likely to remain. Let's explore the specific models in the DeepSeek family and how they manage to do all of the above.

DeepSeek-V2 introduced another DeepSeek innovation, Multi-Head Latent Attention (MLA). MLA is a modified attention mechanism for Transformers that processes information faster and uses less memory. Language Understanding: DeepSeek performs well in open-ended generation tasks in English and Chinese, showcasing its multilingual processing capabilities.

A traditional Mixture-of-Experts (MoE) architecture divides tasks among multiple expert models and uses a gating mechanism to select the most relevant expert(s) for each input. The router is the mechanism that decides which expert (or experts) should handle a particular piece of data or task. DeepSeekMoE is an advanced version of the MoE architecture designed to improve how LLMs handle complex tasks. Its shared experts are always active and handle common knowledge that multiple tasks might need.
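The router and gating mechanism described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not DeepSeek's implementation. It assumes a linear router with softmax scores, top-k selection, and gates renormalised over the chosen experts, plus shared experts that process every input. DeepSeek's real routers differ in details; for example, DeepSeek-V3 uses sigmoid affinity scores. Every name here (`moe_layer`, the toy `scale` experts, the router weights) is hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> Vector:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(
    x: Vector,
    shared: Sequence[Expert],
    routed: Sequence[Expert],
    router_weights: Sequence[Vector],
    k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Hypothetical MoE layer: shared experts always run, the router picks top-k routed experts."""
    # Router: one score per routed expert (dot product of its weight vector with x).
    logits = [sum(w_j * x_j for w_j, x_j in zip(w, x)) for w in router_weights]
    probs = softmax(logits)
    # Gating: keep only the k highest-scoring routed experts.
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)

    out = [0.0] * len(x)
    # Shared experts process every input (common knowledge).
    for expert in shared:
        out = [o + y for o, y in zip(out, expert(x))]
    # Chosen routed experts contribute in proportion to their renormalised gate value.
    for i in chosen:
        gate = probs[i] / norm
        out = [o + gate * y for o, y in zip(out, routed[i](x))]
    return out, chosen


def scale(c: float) -> Expert:
    """Toy 'expert' that just multiplies its input by a constant."""
    return lambda v: [c * t for t in v]


shared_experts = [scale(1.0)]
routed_experts = [scale(2.0), scale(3.0), scale(-1.0), scale(0.5)]
router_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]

out, chosen = moe_layer([2.0, 1.0], shared_experts, routed_experts, router_weights, k=2)
print(chosen)  # [0, 1]
print(out)
```

For this input only routed experts 0 and 1 run, and the other two are skipped entirely. That sparsity is where MoE saves compute. A real layer would route every token independently and work on batched tensors rather than Python lists.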

Even OpenAI’s closed source method can’t forestall others from catching up. ????Open Source! DeepSeek LLM 7B/67B Base&Chat released. How open supply raises the worldwide AI normal, however why there’s likely to all the time be a gap between closed and open-supply models. Let’s explore the particular fashions within the DeepSeek household and the way they handle to do all of the above. The router is a mechanism that decides which knowledgeable (or experts) should handle a specific piece of information or process. Traditional Mixture of Experts (MoE) architecture divides duties among multiple expert models, choosing essentially the most relevant skilled(s) for every enter utilizing a gating mechanism. DeepSeek-V2 introduced another of DeepSeek’s improvements - Multi-Head Latent Attention (MLA), a modified attention mechanism for Transformers that enables faster data processing with less reminiscence usage. Language Understanding: DeepSeek performs properly in open-ended generation tasks in English and Chinese, showcasing its multilingual processing capabilities. DeepSeekMoE is a sophisticated version of the MoE architecture designed to enhance how LLMs handle advanced duties. They handle frequent data that a number of duties would possibly want.

You also need talented people to operate them. An unoptimized version of DeepSeek-V3 would need a bank of high-end GPUs to answer questions at reasonable speeds. The newest model, released by DeepSeek in August 2024, is DeepSeek-Prover-V1.5, an optimized version of their open-source model for theorem proving in Lean 4. In an MoE model, routing ensures that each task is handled by the part of the model best suited for it. For DeepSeek-V3's fine-tuning data, DeepSeek used a method that retains the strengths of DeepSeek-R1 while producing responses that are concise and effective. Despite its excellent performance, DeepSeek-V3 requires only 2.788M H800 GPU hours for its full training. During the pre-training stage, training DeepSeek-V3 on each trillion tokens requires only 180K H800 GPU hours, i.e., 3.7 days on DeepSeek's own cluster of 2048 H800 GPUs. Its expansive dataset, meticulous training methodology, and unparalleled performance across coding, mathematics, and language comprehension make it a standout. You dream it, we make it.
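The GPU-hour figures above can be checked with simple arithmetic. The sketch assumes all 2048 GPUs run in parallel for the whole period, which is how the 3.7-day figure is derived. Applying the same conversion to the 2.788M total is an illustrative extrapolation, not a number stated in the text.

```python
# Figures quoted in the paragraph above.
PRETRAIN_GPU_HOURS_PER_TRILLION_TOKENS = 180_000
TOTAL_TRAINING_GPU_HOURS = 2_788_000
CLUSTER_GPUS = 2048


def wall_clock_days(gpu_hours: float, num_gpus: int) -> float:
    """Convert GPU-hours to wall-clock days, assuming every GPU is busy the whole time."""
    return gpu_hours / num_gpus / 24


days_per_trillion = wall_clock_days(PRETRAIN_GPU_HOURS_PER_TRILLION_TOKENS, CLUSTER_GPUS)
total_days = wall_clock_days(TOTAL_TRAINING_GPU_HOURS, CLUSTER_GPUS)

print(f"{days_per_trillion:.1f} days per trillion pre-training tokens")  # 3.7
print(f"{total_days:.1f} days for the full training budget")  # 56.7
```

180,000 GPU hours spread over 2048 GPUs is about 87.9 hours, or roughly 3.7 days, which matches the quoted figure.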

Today, the amount of data generated by both people and machines far outpaces our ability to absorb, interpret, and make complex decisions based on that information. In one ablation, DeepSeek took two baseline models and kept the training data and the rest of the architecture the same. It then removed all auxiliary losses and introduced its auxiliary-loss-free load-balancing strategy for comparison. It's not just the training set that's massive.

Set the temperature in the range 0.5-0.7 (0.6 is recommended) to prevent endless repetition or incoherent output.

The model excels at understanding and responding to a wide range of conversational cues, maintaining context, and providing coherent, relevant responses in dialogue. According to The New York Times, DeepSeek also hires people without any computer science background to help its technology better understand a wide range of subjects. Fact: in a capitalist society, people have the freedom to pay for the services they need.

Since May 2024, we have watched the development and success of the DeepSeek-V2 and DeepSeek-Coder-V2 models. Chain-of-thought (CoT) reasoning and test-time compute are widely seen as the future direction of language models, for better or for worse. This time, the developers upgraded the previous version of their Coder: DeepSeek-Coder-V2 now supports 338 programming languages and a 128K context length.
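The temperature recommendation above works by dividing the model's logits by the temperature before the softmax. Values below 1 sharpen the distribution toward the most likely token, and values above 1 flatten it. The following is a minimal sampling sketch in plain Python. The logits are made-up numbers, and `sample_token` is a hypothetical helper, not part of any DeepSeek API.

```python
import math
import random
from typing import List, Optional, Sequence


def softmax_with_temperature(logits: Sequence[float], temperature: float) -> List[float]:
    """Turn logits into probabilities after dividing by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(
    logits: Sequence[float], temperature: float = 0.6, rng: Optional[random.Random] = None
) -> int:
    """Sample one token index; 0.6 mirrors the value recommended in the text."""
    rng = rng or random.Random()
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


logits = [2.0, 1.0, 0.5, -1.0]  # hypothetical next-token scores
for t in (0.3, 0.6, 1.0, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t}: top-token probability {probs[0]:.3f}")

token = sample_token(logits, temperature=0.6, rng=random.Random(0))
```

The loop shows the top token's probability shrinking as the temperature rises. This is presumably why a middle range like 0.5-0.7 is suggested: it avoids the near-greedy decoding that can loop on repetitions without flattening the distribution so much that output turns incoherent.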