The Meaning Of Deepseek

Author: Gia Eklund | Date: 25-02-01 10:12

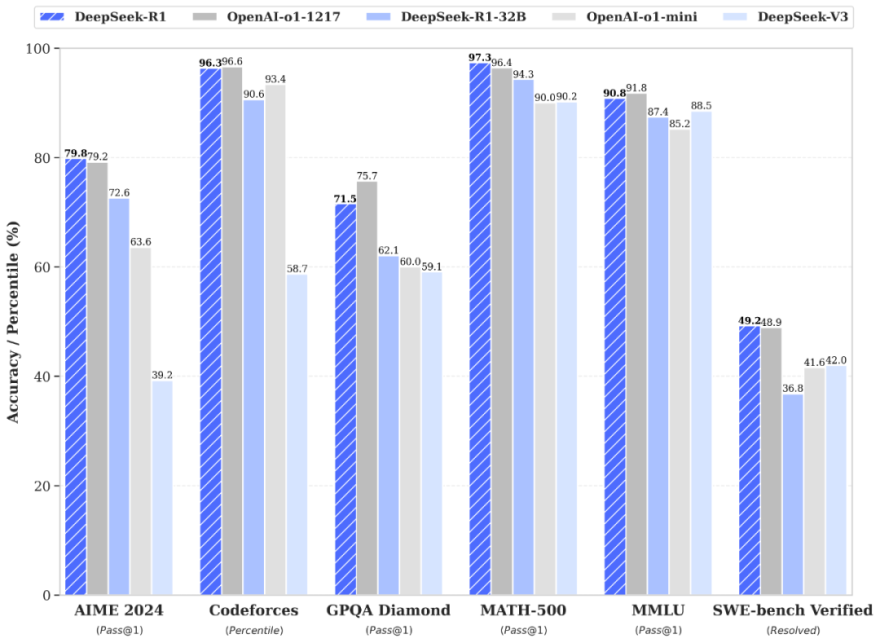

DeepSeek-R1, released by DeepSeek. Like other AI startups, including Anthropic and Perplexity, DeepSeek released various competitive AI models over the past year that have captured some industry attention. On 9 January 2024, they released 2 DeepSeek-MoE models (Base, Chat), each of 16B parameters (2.7B activated per token, 4K context length). Field, Hayden (27 January 2025). "China's DeepSeek AI dethrones ChatGPT on App Store: Here's what you should know".

Why this matters - asymmetric warfare comes to the ocean: "Overall, the challenges presented at MaCVi 2025 featured strong entries across the board, pushing the boundaries of what is possible in maritime vision in several different aspects," the authors write. "Occasionally, niches intersect with disastrous consequences, as when a snail crosses the highway," the authors write.

I think I'll make some little project and document it in monthly or weekly devlogs until I get a job. As reasoning progresses, we’d project into increasingly focused spaces with higher precision per dimension. I also think the low precision of the higher dimensions lowers the compute cost, so it is comparable to current models.
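To make the DeepSeek-MoE figures quoted earlier concrete (16B total parameters, 2.7B activated per token), here is a minimal arithmetic sketch. The function name `active_fraction` is made up for illustration and is not part of any DeepSeek code; only the two parameter counts come from the text.

```python
def active_fraction(total_params: float, active_params: float) -> float:
    """Return the share of a mixture-of-experts model's parameters used per token."""
    if total_params <= 0:
        raise ValueError("total_params must be positive")
    if not 0 <= active_params <= total_params:
        raise ValueError("active_params must lie between 0 and total_params")
    return active_params / total_params


# Figures quoted in the post for the DeepSeek-MoE models.
TOTAL_PARAMS = 16e9    # 16B parameters in total
ACTIVE_PARAMS = 2.7e9  # 2.7B parameters activated per token

frac = active_fraction(TOTAL_PARAMS, ACTIVE_PARAMS)
print(f"Roughly {frac:.0%} of the parameters are active for each token")
```

In other words, only about a sixth of the weights take part in any single token's forward pass, which is the usual reason mixture-of-experts models are cheaper to run than dense models of the same total size.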

Remember, while you can offload some weights to the system RAM, it will come at a performance cost. I believe the idea of "infinite" energy with minimal cost and negligible environmental impact is something we should be striving for as a people, but in the meantime, the radical reduction in LLM energy requirements is something I’m excited to see. Also, I see people compare LLM energy usage to Bitcoin, but it’s worth noting that, as I mentioned in this members’ post, Bitcoin use is hundreds of times more substantial than LLMs, and a key difference is that Bitcoin is fundamentally built on using more and more power over time, while LLMs will get more efficient as technology improves. I’m not really clued into this part of the LLM world, but it’s good to see Apple is putting in the work and the community are doing the work to get these running great on Macs. The Artifacts feature of Claude web is great as well, and is useful for generating throw-away little React interfaces. That is all great to hear, though that doesn’t mean the big companies out there aren’t massively increasing their datacenter investment in the meantime.
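The remark about offloading weights to system RAM can be sized with back-of-the-envelope arithmetic. The sketch below is only an estimate under loud assumptions: the 16B parameter count, 2 bytes per parameter (16-bit weights) and the 24 GiB GPU are hypothetical example values, `split_weights` is an illustrative helper rather than a real library call, and the estimate ignores activations, the KV cache and framework overhead.

```python
GIB = 1024 ** 3  # bytes in one gibibyte


def split_weights(num_params: int, bytes_per_param: int, vram_bytes: int) -> tuple[int, int]:
    """Return (bytes kept on the GPU, bytes offloaded to system RAM).

    Assumes the weights are the only thing occupying GPU memory,
    which is optimistic for real inference workloads.
    """
    total = num_params * bytes_per_param
    on_gpu = min(total, vram_bytes)
    return on_gpu, total - on_gpu


# Hypothetical setup: a 16B-parameter model in 16-bit weights on a 24 GiB GPU.
on_gpu, offloaded = split_weights(16_000_000_000, 2, 24 * GIB)
print(f"on GPU: {on_gpu / GIB:.1f} GiB, offloaded to RAM: {offloaded / GIB:.1f} GiB")
```

Whatever ends up in the offloaded portion has to cross the much slower path between system RAM and the GPU during inference, which is where the performance cost comes from.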

I think this speaks to a bubble on the one hand, as every executive is going to want to advocate for more investment now, but things like DeepSeek v3 also point towards radically cheaper training in the future. I’ve been in a mode of trying lots of new AI tools for the past year or two, and feel like it’s useful to take an occasional snapshot of the "state of things I use", as I expect this to continue to change pretty quickly. Things are changing fast, and it’s important to keep up to date with what’s going on, whether you want to support or oppose this tech.

Of course we are doing some anthropomorphizing, but the intuition here is as well founded as anything else. The fine-tuning job relied on a rare dataset he’d painstakingly gathered over months - a compilation of interviews psychiatrists had done with patients with psychosis, as well as interviews those same psychiatrists had done with AI systems. The manifold becomes smoother and more precise, ideal for fine-tuning the final logical steps. While we lose some of that initial expressiveness, we gain the ability to make more precise distinctions, which is perfect for refining the final steps of a logical deduction or mathematical calculation.

The initial high-dimensional space provides room for that kind of intuitive exploration, while the final high-precision space ensures rigorous conclusions.

Why this matters - a lot of notions of control in AI policy get harder if you need fewer than a million samples to convert any model into a ‘thinker’: The most underhyped part of this release is the demonstration that you can take models not trained in any kind of major RL paradigm (e.g., Llama-70b) and convert them into powerful reasoning models using just 800k samples from a strong reasoner.

A lot of the time, it’s cheaper to solve these problems because you don’t need a lot of GPUs. I don’t subscribe to Claude’s pro tier, so I mostly use it within the API console or via Simon Willison’s excellent llm CLI tool. I don’t have the resources to explore them any further. According to Clem Delangue, the CEO of Hugging Face, one of the platforms hosting DeepSeek’s models, developers on Hugging Face have created over 500 "derivative" models of R1 that have racked up 2.5 million downloads combined. This time the developers upgraded the previous version of their Coder, and now DeepSeek-Coder-V2 supports 338 languages and 128K context length. DeepSeek Coder - can it code in React?
