Using 7 DeepSeek Methods Like the Pros

Page information

Author: Velva | Date: 25-02-01 07:46 | Views: 10 | Comments: 0

If all you want to do is ask questions of an AI chatbot, generate code, or extract text from images, then you'll find that DeepSeek currently appears to meet all your needs without charging you anything. Click the Model tab. If you want any custom settings, set them and then click Save settings for this model, followed by Reload the Model in the top right. Once you're ready, click the Text Generation tab and enter a prompt to get started! On top of the efficient architecture of DeepSeek-V2, we pioneer an auxiliary-loss-free strategy for load balancing, which minimizes the performance degradation that arises from encouraging load balancing. It's part of an important movement, after years of scaling models by raising parameter counts and amassing larger datasets, toward achieving high performance by spending more energy on generating output. It's worth remembering that you can get surprisingly far with somewhat outdated technology. My previous article went over how to get Open WebUI set up with Ollama and Llama 3, but this isn't the only way I take advantage of Open WebUI. DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models and AutoCoder: Enhancing Code with Large Language Models are related papers that explore similar themes and advancements in the field of code intelligence.
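To make the auxiliary-loss-free load-balancing idea a little more concrete, here is a minimal toy sketch. It assumes the commonly described form of the technique: each expert carries a bias that is added to its routing score for top-k selection only, and the bias is nudged down for overloaded experts and up for underloaded ones after each batch. The function names, the skewed random scores, and the step size `gamma` are all illustrative assumptions, not DeepSeek's actual implementation.

```python
import random


def route(scores, bias, k):
    """Pick the top-k experts by biased score.

    The bias influences selection only; in the assumed scheme the gating
    weights would still be computed from the original, unbiased scores.
    """
    ranked = sorted(range(len(scores)),
                    key=lambda i: scores[i] + bias[i],
                    reverse=True)
    return ranked[:k]


def update_bias(bias, load, gamma):
    """Lower the bias of overloaded experts, raise it for underloaded ones."""
    mean_load = sum(load) / len(load)
    new_bias = []
    for b, l in zip(bias, load):
        if l > mean_load:
            new_bias.append(b - gamma)
        elif l < mean_load:
            new_bias.append(b + gamma)
        else:
            new_bias.append(b)
    return new_bias


def simulate(num_experts=4, k=1, tokens=512, steps=100, gamma=0.01, seed=0):
    """Toy simulation: expert 0 gets an artificial score advantage (hypothetical)."""
    rng = random.Random(seed)
    skew = [0.3] + [0.0] * (num_experts - 1)
    bias = [0.0] * num_experts
    first_load = last_load = None
    for step in range(steps):
        load = [0] * num_experts
        for _ in range(tokens):
            scores = [rng.random() + skew[i] for i in range(num_experts)]
            for expert in route(scores, bias, k):
                load[expert] += 1
        if step == 0:
            first_load = load
        last_load = load
        bias = update_bias(bias, load, gamma)
    return first_load, last_load, bias
```

Calling `simulate()` returns the per-expert token counts for the first and last batch plus the final biases. In this toy setup the favoured expert starts out heavily overloaded and drifts back toward an even share as its bias falls, without any extra loss term being added to the training objective.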

This is because the simulation naturally allows the agents to generate and explore a large dataset of (simulated) medical scenarios, but the dataset also has traces of truth in it via the validated medical records and the general knowledge base being accessible to the LLMs inside the system. Sequence Length: The length of the dataset sequences used for quantisation. Like o1-preview, most of its performance gains come from an approach known as test-time compute, which trains an LLM to think at length in response to prompts, using more compute to generate deeper answers. Using a dataset more appropriate to the model's training can improve quantisation accuracy. "...93.06% on a subset of the MedQA dataset that covers major respiratory diseases," the researchers write. Researchers with the Chinese Academy of Sciences, China Electronics Standardization Institute, and JD Cloud have published a language model jailbreaking technique they call IntentObfuscator. Google DeepMind researchers have taught some little robots to play soccer from first-person videos.
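As a rough illustration of what "sequence length" means for a quantisation calibration dataset, the sketch below cuts a stream of token IDs into fixed-length samples. The helper name `make_calibration_samples` and the `max_samples` parameter are hypothetical; real quantisation tools do their own tokenisation and sampling, so treat this only as a picture of the idea.

```python
def make_calibration_samples(token_ids, seq_len, max_samples=None):
    """Split a flat list of token IDs into non-overlapping samples of seq_len.

    A trailing remainder shorter than seq_len is dropped, so every
    calibration sample has exactly the requested sequence length.
    """
    if seq_len <= 0:
        raise ValueError("seq_len must be positive")
    samples = [
        token_ids[start:start + seq_len]
        for start in range(0, len(token_ids) - seq_len + 1, seq_len)
    ]
    if max_samples is not None:
        samples = samples[:max_samples]
    return samples
```

A shorter `seq_len` yields more, smaller samples from the same text. It only changes what the quantiser sees during calibration, not how long a sequence the quantised model can handle afterwards.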


Specifically, patients are generated via LLMs, and patients have specific illnesses based on real medical literature. For those not terminally on Twitter, a lot of people who are massively pro AI progress and anti-AI regulation fly under the flag of 'e/acc' (short for 'effective accelerationism'). Microsoft Research thinks expected advances in optical communication - using light to funnel data around rather than electrons through copper wire - will potentially change how people build AI datacenters. I assume that most people who still use the latter are newcomers following tutorials that haven't been updated yet, or possibly even ChatGPT outputting responses with create-react-app instead of Vite. By 27 January 2025 the app had surpassed ChatGPT as the highest-rated free app on the iOS App Store in the United States; its chatbot reportedly answers questions, solves logic problems and writes computer programs on par with other chatbots on the market, according to benchmark tests used by American A.I. companies. DeepSeek vs ChatGPT - how do they compare? DeepSeek LLM is an advanced language model available in both 7 billion and 67 billion parameter sizes.

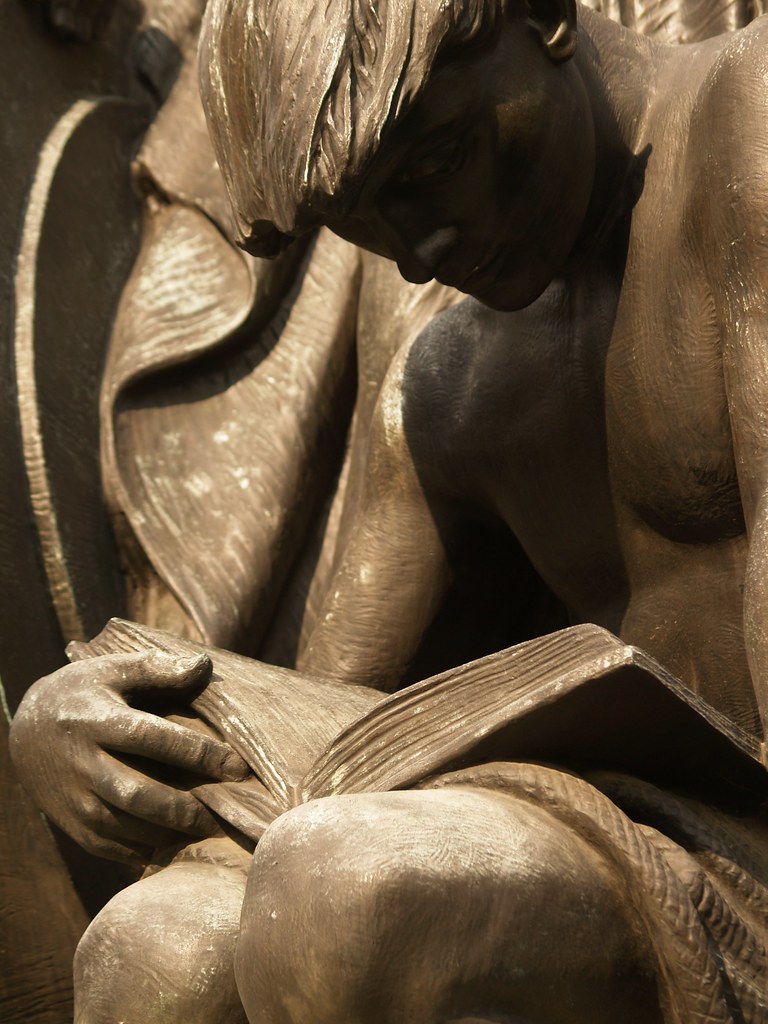

This repo contains GPTQ model files for DeepSeek's Deepseek Coder 33B Instruct. Note that a lower sequence length does not limit the sequence length of the quantised model. For the GPTQ group size, higher numbers use less VRAM but have lower quantisation accuracy. ...K), a lower sequence length may have to be used. In this revised version, we have omitted the lowest scores for questions 16, 17, 18, as well as for the aforementioned image. This cover image is the best one I've seen on Dev so far! Why this is so impressive: The robots get a massively pixelated image of the world in front of them and, nonetheless, are able to automatically learn a bunch of sophisticated behaviors. Get the REBUS dataset here (GitHub). "In the first stage, two separate experts are trained: one that learns to get up from the ground and another that learns to score against a fixed, random opponent." Each brings something unique, pushing the boundaries of what AI can do.
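Why would a larger group size use less VRAM? A back-of-the-envelope sketch, assuming each group of weights stores one 16-bit scale and one zero point packed at the weight bit width (a simplification that ignores activations, the KV cache, and any act-order index data): the per-group metadata is amortised over more weights as the group grows. The function names below are hypothetical and the numbers are estimates only.

```python
def bits_per_weight(bits, group_size, scale_bits=16, zero_bits=None):
    """Approximate storage cost per weight for group-wise quantisation.

    Assumption: every group of `group_size` weights shares one scale
    (scale_bits) and one zero point (zero_bits, defaulting to `bits`).
    """
    if group_size <= 0:
        raise ValueError("group_size must be positive")
    if zero_bits is None:
        zero_bits = bits
    return bits + (scale_bits + zero_bits) / group_size


def approx_weight_gib(n_params, bits, group_size):
    """Rough size of the quantised weights alone, in GiB."""
    return n_params * bits_per_weight(bits, group_size) / 8 / 2**30


# Compare a few group sizes for a 4-bit, roughly 33B-parameter model.
for gs in (32, 64, 128):
    print(gs, round(bits_per_weight(4, gs), 4),
          round(approx_weight_gib(33e9, 4, gs), 2))
```

Under these assumptions the difference between group size 32 and 128 is a fraction of a bit per weight, which still adds up to a noticeable amount of memory at this parameter count. The accuracy cost comes from the other direction: one scale has to cover more weights.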

