Deepseek Fears Demise

페이지 정보

작성자 Modesto 작성일 25-02-01 04:37 조회 4 댓글 0본문

???? What makes DeepSeek R1 a sport-changer? We introduce an innovative methodology to distill reasoning capabilities from the lengthy-Chain-of-Thought (CoT) model, particularly from one of the DeepSeek R1 sequence models, into standard LLMs, notably DeepSeek-V3. In-depth evaluations have been conducted on the base and chat fashions, evaluating them to current benchmarks. Points 2 and three are basically about my monetary resources that I don't have available for the time being. The callbacks should not so troublesome; I know the way it worked prior to now. I don't actually know the way occasions are working, and it turns out that I needed to subscribe to events in order to send the associated events that trigerred within the Slack APP to my callback API. Getting conversant in how the Slack works, partially. Jog somewhat bit of my reminiscences when trying to combine into the Slack. Reasoning models take a little longer - usually seconds to minutes longer - to arrive at options in comparison with a typical non-reasoning model. Monte-Carlo Tree Search: DeepSeek-Prover-V1.5 employs Monte-Carlo Tree Search to effectively discover the house of potential options. This might have important implications for fields like arithmetic, computer science, and past, by helping researchers and downside-solvers find solutions to difficult issues extra effectively.

???? What makes DeepSeek R1 a sport-changer? We introduce an innovative methodology to distill reasoning capabilities from the lengthy-Chain-of-Thought (CoT) model, particularly from one of the DeepSeek R1 sequence models, into standard LLMs, notably DeepSeek-V3. In-depth evaluations have been conducted on the base and chat fashions, evaluating them to current benchmarks. Points 2 and three are basically about my monetary resources that I don't have available for the time being. The callbacks should not so troublesome; I know the way it worked prior to now. I don't actually know the way occasions are working, and it turns out that I needed to subscribe to events in order to send the associated events that trigerred within the Slack APP to my callback API. Getting conversant in how the Slack works, partially. Jog somewhat bit of my reminiscences when trying to combine into the Slack. Reasoning models take a little longer - usually seconds to minutes longer - to arrive at options in comparison with a typical non-reasoning model. Monte-Carlo Tree Search: DeepSeek-Prover-V1.5 employs Monte-Carlo Tree Search to effectively discover the house of potential options. This might have important implications for fields like arithmetic, computer science, and past, by helping researchers and downside-solvers find solutions to difficult issues extra effectively.

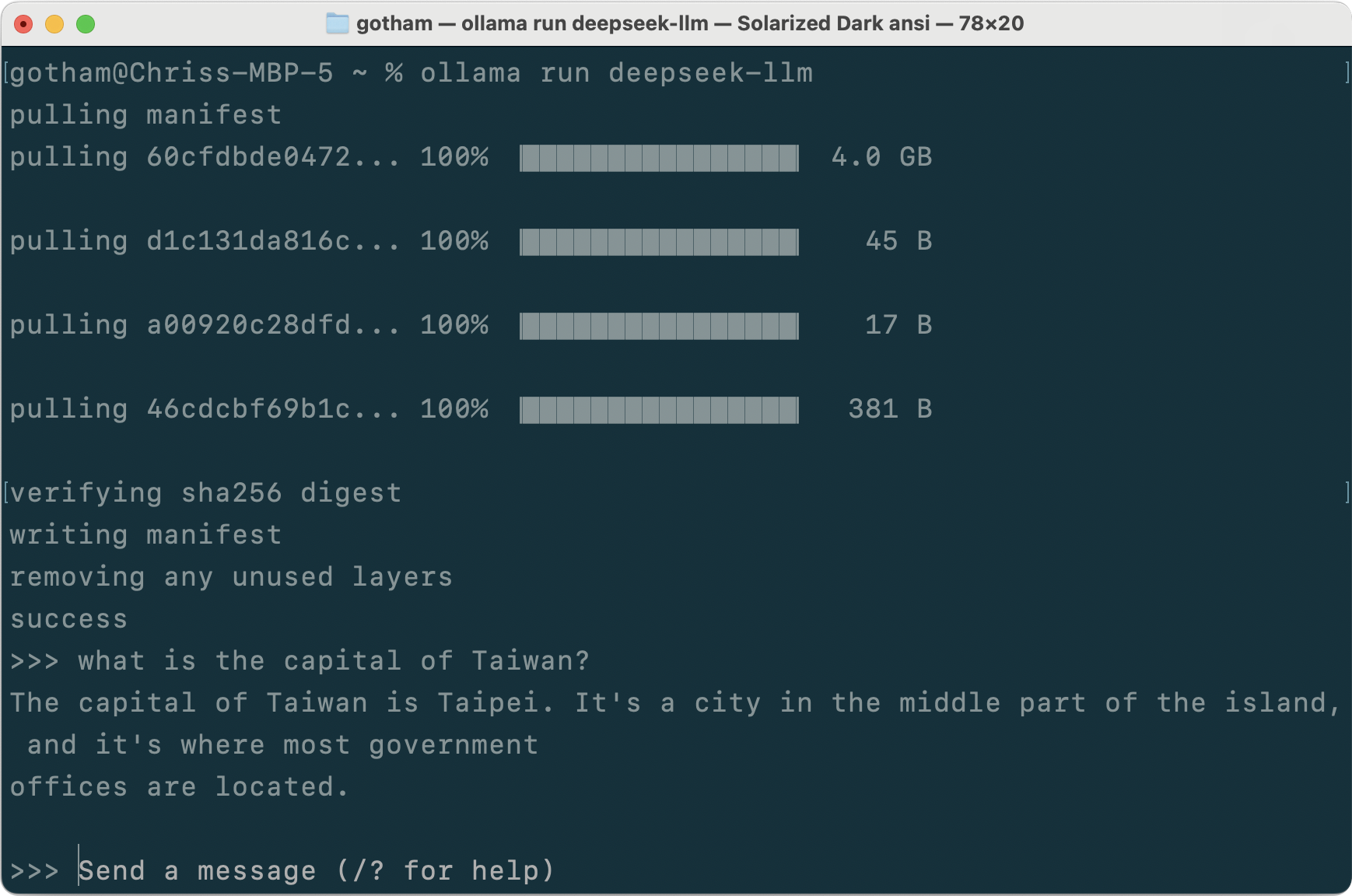

This innovative approach has the potential to vastly speed up progress in fields that depend on theorem proving, equivalent to arithmetic, computer science, and past. However, further analysis is required to address the potential limitations and explore the system's broader applicability. Whether you are a knowledge scientist, enterprise chief, or tech enthusiast, DeepSeek R1 is your final device to unlock the true potential of your information. U.S. tech giant Meta spent building its latest A.I. Is deepseek ai’s tech pretty much as good as systems from OpenAI and Google? OpenAI o1 equivalent locally, which isn't the case. Synthesize 200K non-reasoning knowledge (writing, factual QA, self-cognition, translation) utilizing DeepSeek-V3. ’s capabilities in writing, function-enjoying, and different basic-function tasks". So I started digging into self-internet hosting AI models and quickly found out that Ollama could assist with that, I also regarded through various different methods to start out utilizing the vast quantity of fashions on Huggingface however all roads led to Rome.

This innovative approach has the potential to vastly speed up progress in fields that depend on theorem proving, equivalent to arithmetic, computer science, and past. However, further analysis is required to address the potential limitations and explore the system's broader applicability. Whether you are a knowledge scientist, enterprise chief, or tech enthusiast, DeepSeek R1 is your final device to unlock the true potential of your information. U.S. tech giant Meta spent building its latest A.I. Is deepseek ai’s tech pretty much as good as systems from OpenAI and Google? OpenAI o1 equivalent locally, which isn't the case. Synthesize 200K non-reasoning knowledge (writing, factual QA, self-cognition, translation) utilizing DeepSeek-V3. ’s capabilities in writing, function-enjoying, and different basic-function tasks". So I started digging into self-internet hosting AI models and quickly found out that Ollama could assist with that, I also regarded through various different methods to start out utilizing the vast quantity of fashions on Huggingface however all roads led to Rome.

We can be using SingleStore as a vector database here to store our knowledge. The system will attain out to you inside 5 enterprise days. China’s DeepSeek team have constructed and launched DeepSeek-R1, a mannequin that uses reinforcement learning to train an AI system to be ready to use check-time compute. The important thing contributions of the paper embody a novel strategy to leveraging proof assistant suggestions and advancements in reinforcement studying and search algorithms for theorem proving. Reinforcement learning is a sort of machine studying the place an agent learns by interacting with an environment and receiving suggestions on its actions. deepseek (Source Webpage)-Prover-V1.5 is a system that combines reinforcement studying and Monte-Carlo Tree Search to harness the suggestions from proof assistants for improved theorem proving. DeepSeek-Prover-V1.5 goals to address this by combining two powerful techniques: reinforcement studying and Monte-Carlo Tree Search. It is a Plain English Papers summary of a research paper referred to as deepseek ai-Prover advances theorem proving via reinforcement studying and Monte-Carlo Tree Search with proof assistant feedbac. This suggestions is used to replace the agent's policy and information the Monte-Carlo Tree Search course of.

An intensive alignment process - significantly attuned to political risks - can certainly information chatbots toward generating politically acceptable responses. So after I discovered a model that gave fast responses in the fitting language. I started by downloading Codellama, Deepseeker, and Starcoder however I discovered all the fashions to be pretty sluggish at the least for code completion I wanna point out I've gotten used to Supermaven which focuses on fast code completion. I'm noting the Mac chip, and presume that is fairly fast for running Ollama right? It is deceiving to not particularly say what model you are running. Could you've got more benefit from a bigger 7b mannequin or does it slide down a lot? While there is broad consensus that DeepSeek’s release of R1 at the least represents a big achievement, some distinguished observers have cautioned towards taking its claims at face worth. The callbacks have been set, and the occasions are configured to be despatched into my backend. All these settings are something I will keep tweaking to get one of the best output and I'm additionally gonna keep testing new fashions as they become accessible. "Time will tell if the DeepSeek risk is actual - the race is on as to what expertise works and how the massive Western players will respond and evolve," stated Michael Block, market strategist at Third Seven Capital.

댓글목록 0

등록된 댓글이 없습니다.